A brief guide to A/B tests in Dashly

Hello to everyone who was walking their dog, lying on the beach on vacation, saving the world, or busy with other important duties during our webinar on A/B tests in Dashly. Good news: in this article, we’ve gathered the most important content from the webinar, so you’ll have all the information and inspiration you need to start running A/B tests with confidence.

What are A/B tests?

In a nutshell, an A/B test is an experiment that compares how different versions of a message perform. With A/B tests, you can compare:

- performance of all kinds of messages;

- performance of various communication channels.

Why do we need them?

A/B testing helps marketers learn what appeals most to their target audience so they can successfully plan future marketing campaigns and strategies. In this way, you can save yourself from a big failure in the long run. It also shows you which words, phrases, images, and other elements work best for your marketing campaign.

Usama Raudo, Digital Marketing Strategist at Within The Flow

A/B tests are worth using for three reasons:

1. Faster hypothesis testing

For example, suppose you’re unhappy with how well one particular landing page converts visitors into leads. A complete redesign might take nearly a month and involve the whole team, with no guarantee that the conversion rate will change. An A/B test can show you the effect of a change in just one or two weeks and requires far fewer resources.

2. The statistics behind your decisions

Make changes based on exact numbers and facts, such as your website user-tracking report, rather than on gut feeling. You can test any page element, and the A/B test results will show, based on the data, which option performs better.

3. Lots of useful insights

A/B tests help you understand your audience better: how to engage it more effectively and what brings the best results. You may find that the channels and values you counted on most simply don’t work.

But are there situations when you don’t need A/B testing?

But if you’ve decided that you do need to test options after all, we have a solution for you 👇

How do you configure an auto message in the Dashly chatbot platform?

To set one up, go to the Auto messages section, click ‘Create Auto Message’, and either choose a message from the templates or create a new one. When you’re done, click ‘Create A/B-test’ and create the second message you want to compare it with.

How do you estimate how long an A/B test will take?

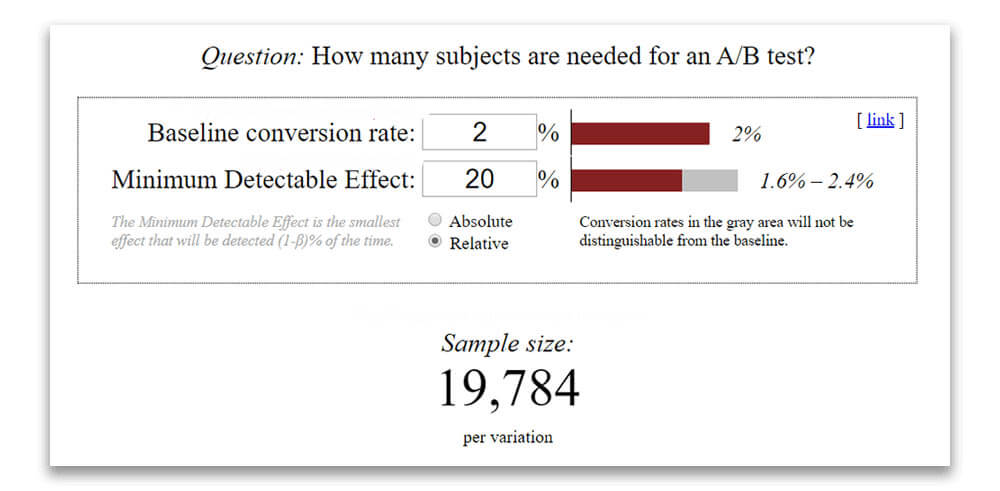

You can estimate this with Evan Miller’s sample size calculator.

To see how long an A/B test will take and whether it’s worth the effort, you’ll need your approximate website traffic, your current conversion rate, and the conversion rate increase you expect to detect.

The calculator gives you the number of impressions each option needs for a statistically significant result. Compare that number with your approximate website traffic to see how many days or weeks the test will run.

If an A/B test would take a very long time, it’s usually not worth running at all: your offer may change in the meantime, and your time and effort will be wasted. It’s better to run short A/B tests with message options that differ markedly from each other.

How do you make decisions based on the test results?

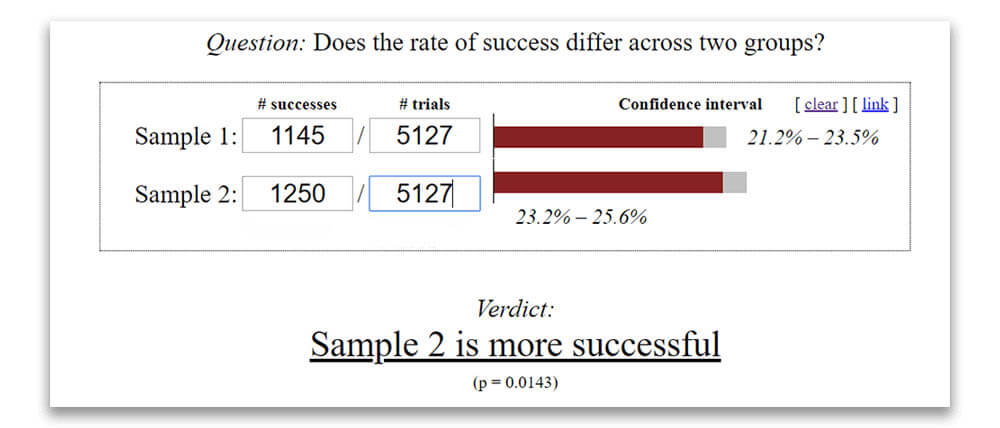

To find out which option performed better, enter the number of impressions and the conversion rate of each option into Evan Miller’s calculator.

Even a difference that looks dramatic at first glance may not be statistically significant. If you haven’t reached significance, you can estimate how much longer the test would need to run, but we recommend keeping either option and moving on to other hypotheses.
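For readers who want to see what such a significance check does, here is a minimal Python sketch of a pooled two-proportion z-test, one common way to compare two conversion rates. Whether it matches the calculator’s exact method is an assumption. It takes conversion counts (impressions × conversion rate) rather than rates, and the figures in the example are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conversions_a: int, impressions_a: int,
                          conversions_b: int, impressions_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conversions_a / impressions_a
    rate_b = conversions_b / impressions_b
    # Pooled rate under the null hypothesis that both options convert equally.
    pooled = (conversions_a + conversions_b) / (impressions_a + impressions_b)
    std_err = math.sqrt(pooled * (1 - pooled)
                        * (1 / impressions_a + 1 / impressions_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical results: 5% vs 6.5% conversion at two traffic levels.
    for impressions in (200, 2000):
        conv_a = impressions * 5 // 100
        conv_b = impressions * 65 // 1000
        z, p = two_proportion_z_test(conv_a, impressions, conv_b, impressions)
        verdict = "significant" if p < 0.05 else "not significant"
        print(f"{impressions} per option: z = {z:.2f}, p = {p:.3f} ({verdict})")
```

In this hypothetical example, the same 5% vs 6.5% split gives p ≈ 0.52 with 200 impressions per option but p ≈ 0.04 with 2,000, which illustrates how a difference that looks dramatic can still fall short of significance.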

What’s best to test?

Start by testing customer values and substantially different messages (various combinations of text and design). Such tests tend to produce larger differences in conversion rate, so they reach statistical significance sooner and give you valuable insights faster. Besides, for growth, the value you offer matters more than buttons or fonts.

We don’t recommend testing small things like fonts or button colors, because it takes a long time to get a statistically significant result. Changing a button color, waiting six months, and getting a 0.5% difference in conversion rate is a dubious prospect that won’t help your project grow several-fold.

Such tests make sense only once you’ve run out of other hypotheses. Otherwise, your product or website may change while the test is running, and the test will become outdated before you get a result.

What other tests are there?

If you’ve tested all your hypotheses and standard A/B tests are not enough, consider these other options:

- A/A test. The same message is shown to two segments to check that the traffic is homogeneous. It is used as a lead-up to an A/B test, but it takes a lot of time and is of limited use.

- A/A/B test. It is launched when you suspect that traffic within a certain sample is not homogeneous enough. The result for segment B can be trusted only when the two A segments perform equally.

- A/B test with a reference group. Within this test, the audience is divided into three groups: 45% see option A, 45% see option B, and 10% see nothing. This test shows whether your message influences users at all. If the reference group converts at the same rate as the others or better, this is a red flag that the A/B test should be shut down.
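To illustrate how a 45/45/10 split with a reference group can be assigned, here is a minimal Python sketch that buckets users deterministically by hashing their ID. This is a common hashing technique and not necessarily how Dashly splits its audience; `assign_group` and the test name `welcome_popup` are hypothetical.

```python
import hashlib
from collections import Counter


def assign_group(user_id: str, test_name: str) -> str:
    """Deterministically put a user into A (45%), B (45%) or the reference group (10%)."""
    # Hash the test name together with the user ID so each test gets its own split.
    digest = hashlib.sha256(f"{test_name}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # 0..99, close to uniform
    if bucket < 45:
        return "A"
    if bucket < 90:
        return "B"
    return "reference"  # this group sees no message at all


if __name__ == "__main__":
    counts = Counter(assign_group(f"user-{i}", "welcome_popup") for i in range(10_000))
    print(counts)  # roughly 4,500 / 4,500 / 1,000
```

Hashing the test name together with the user ID keeps each user in the same group on every visit while giving each test an independent split. The reference group’s conversion rate is then compared with those of A and B.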

Run successful tests and enjoy high conversion rates!
